
Until now, even AI companies have struggled to build tools that can reliably detect when a piece of text was generated by a large language model (LLM). Now, a group of researchers has developed a new method for estimating LLM use across a large body of scientific literature by measuring which "excess words" started appearing much more frequently during the LLM era (i.e., 2023 and 2024). The results "suggest that at least 10% of abstracts in 2024 were processed with LLMs," according to the researchers.

In a preprint posted earlier this month, four researchers from Germany's University of Tübingen and Northwestern University said they drew inspiration from studies that measured the impact of the COVID-19 pandemic by looking at excess deaths relative to the recent past. Taking a similar look at "excess word usage" after LLM writing tools became widely available in late 2022, the researchers found that "the advent of LLMs led to a sharp increase in the frequency of certain style words" that was "unprecedented in both quality and quantity."

Dive into

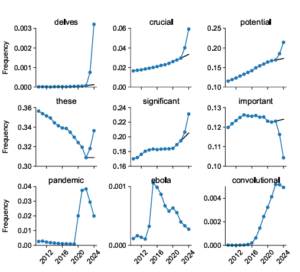

To measure these vocabulary changes, the researchers analyzed 14 million abstracts of papers indexed in PubMed between 2010 and 2024, tracking the relative frequency of each word in each year. They then compared the expected frequency of these words (projected from the pre-2023 trend) with their actual frequency in abstracts from 2023 and 2024, when LLMs were in widespread use.
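The expected-versus-actual comparison can be sketched in a few lines of Python. This is a hedged illustration, not the researchers' code: it assumes a word's yearly frequency is the fraction of that year's abstracts containing it at least once, and it projects the "expected" value with an ordinary least-squares line fitted to pre-2023 years, which may differ from the counterfactual model the paper actually uses. The function names (`tokenize`, `yearly_frequency`, `expected_frequency`) and the toy numbers are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of alphabetic word forms it contains."""
    return set(re.findall(r"[a-z]+", text.lower()))


def yearly_frequency(abstracts_by_year: dict[int, list[str]], word: str) -> dict[int, float]:
    """Fraction of each year's abstracts that contain `word` at least once."""
    word = word.lower()
    freq = {}
    for year, abstracts in abstracts_by_year.items():
        hits = sum(1 for abstract in abstracts if word in tokenize(abstract))
        freq[year] = hits / len(abstracts) if abstracts else 0.0
    return freq


def expected_frequency(freq_by_year: dict[int, float], target_year: int, cutoff: int = 2023) -> float:
    """Project a word's frequency in `target_year` by fitting a straight line
    (ordinary least squares) to its frequencies in the years before `cutoff`."""
    years = sorted(y for y in freq_by_year if y < cutoff)
    if len(years) < 2:
        raise ValueError("need at least two pre-cutoff years to fit a trend")
    values = [freq_by_year[y] for y in years]
    mean_x = sum(years) / len(years)
    mean_y = sum(values) / len(values)
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    # Frequencies cannot be negative, so clamp the extrapolated value at zero.
    return max(0.0, mean_y + slope * (target_year - mean_x))


if __name__ == "__main__":
    # Toy frequencies: steady growth through 2022, then a jump in 2024.
    freqs = {2020: 0.010, 2021: 0.011, 2022: 0.012, 2024: 0.050}
    expected = expected_frequency(freqs, 2024)
    print(f"expected {expected:.3f}, observed {freqs[2024]:.3f}")
```

A straight-line fit is about the simplest way to carry a pre-LLM trend forward; a longer fitting window smooths out year-to-year noise, at the cost of reacting more slowly to genuine drift in usage.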

The results revealed a number of words that were extremely rare in these scientific abstracts before 2023 and suddenly surged in popularity after LLMs were introduced. The word "delves," for example, appears in 25 times as many 2024 papers as the pre-LLM trend would predict; words like "showcase" and "underscores" also saw ninefold increases. Some already common words became notably more common in post-LLM abstracts, too: the frequency of "potential" rose by 4.1 percentage points, "findings" by 2.7 percentage points, and "crucial" by 2.6 percentage points, for example.
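Those figures mix two ways of measuring excess usage: a ratio of observed to expected frequency (telling for rare words such as "delves") and a difference in percentage points (more informative for already common words such as "potential"). A minimal sketch of both, where the function names are hypothetical and the frequencies are invented for illustration, not taken from the paper:

```python
import math


def excess_ratio(observed: float, expected: float) -> float:
    """How many times more often a word appears than the pre-LLM trend predicts."""
    if expected <= 0.0:
        # A word absent from the trend: any use is an unbounded jump; 0/0 means no change.
        return math.inf if observed > 0.0 else 1.0
    return observed / expected


def excess_gap_pp(observed: float, expected: float) -> float:
    """Difference between observed and expected frequency, in percentage points."""
    return (observed - expected) * 100.0


# Invented example values (fractions of abstracts containing the word).
examples = {
    "rare word": (0.0100, 0.0004),    # 1% observed vs. 0.04% expected
    "common word": (0.3410, 0.3000),  # 34.1% observed vs. 30% expected
}

for label, (observed, expected) in examples.items():
    print(f"{label}: ratio {excess_ratio(observed, expected):.1f}x, "
          f"gap {excess_gap_pp(observed, expected):.1f} pp")
```

Here the rare word looks dramatic as a ratio (25x) yet adds only about one percentage point, while the common word barely moves as a ratio (about 1.14x) but adds 4.1 points, which is why the two kinds of words are reported in different units.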

Such shifts in word usage can, of course, happen independently of LLMs; language evolves naturally, and words go in and out of fashion. However, the researchers found that before LLMs, such massive and sudden year-over-year increases were seen only for words tied to major global health events: "Ebola" in 2015, "Zika" in 2017, and words like "coronavirus," "lockdown," and "pandemic" in the 2020 to 2022 period.

After LLMs arrived, however, the researchers found hundreds of words whose use in scientific writing rose suddenly and dramatically with no connection to world events. And while the excess words during the COVID pandemic were overwhelmingly nouns, the words whose frequency rose after LLMs were overwhelmingly "style words" such as verbs, adjectives, and adverbs (a small sample: "across, additional, comprehensive, crucial, enhancing, exhibited, insights, notably, particularly, within").

This is not an entirely new finding: the rising prevalence of "delve" in scientific papers has been widely noted recently, for example. But earlier studies have typically relied on comparisons with "ground truth" human writing samples or on lists of predefined LLM markers obtained from outside the study. Here, the set of pre-2023 abstracts serves as its own control group, showing how vocabulary choice has changed overall in the post-LLM era.

A complex interaction

With hundreds of "marker words" becoming much more common after LLMs arrived, the telltale signs of LLM use can sometimes be easy to spot. Consider this line from an abstract cited by the researchers, which is dense with marker words: "A complete understanding of the complex interaction between (…) and (…) is pivotal for effective therapeutic strategies."
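As a rough illustration of why such sentences stand out, the sketch below counts how many of a text's tokens fall in a marker list. The list is hypothetical and deliberately short, built from a few words this article mentions rather than the researchers' actual set, and it matches exact word forms only, so "delve" would not match "delves". A high count is a hint, not proof of LLM use.

```python
import re

# Hypothetical, illustrative marker list built from words mentioned in this article.
# It is NOT the researchers' list of excess words.
MARKER_WORDS = frozenset({
    "delves", "showcase", "underscores", "potential", "findings",
    "crucial", "comprehensive", "insights", "additional", "across", "within",
})


def marker_hits(text: str, markers: frozenset[str] = MARKER_WORDS) -> list[str]:
    """Return every token in `text`, in order, that exactly matches a marker word."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [token for token in tokens if token in markers]


def marker_density(text: str, markers: frozenset[str] = MARKER_WORDS) -> float:
    """Share of a text's tokens that are marker words (0.0 for empty text)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return 0.0
    return sum(token in markers for token in tokens) / len(tokens)


if __name__ == "__main__":
    sample = "This study delves into crucial insights across patient cohorts."
    print(marker_hits(sample))
    print(f"{marker_density(sample):.2f}")
```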

After running statistical measures of how often marker words appear in individual papers, the researchers estimate that at least 10% of post-2022 papers in the PubMed corpus were written with at least some LLM assistance. The true figure could be even higher, the researchers say, because their analysis may miss LLM-assisted abstracts that don't contain any of the marker words they identified.
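One way to see why such an estimate is a floor rather than a point value: suppose a fraction Δ of abstracts were LLM-processed, human-written abstracts contain at least one marker word with probability q (the pre-LLM expectation), and LLM-processed abstracts contain one with probability at most 1. The observed frequency p then satisfies p ≤ (1 − Δ)q + Δ, which rearranges to Δ ≥ (p − q)/(1 − q). The sketch below applies that bound; it is a simplified illustration under those assumptions, not necessarily the researchers' exact statistical procedure, and the function name and numbers are invented.

```python
def llm_share_lower_bound(observed: float, expected: float) -> float:
    """Lower bound on the fraction of LLM-processed abstracts.

    observed: fraction of abstracts containing at least one marker word.
    expected: counterfactual (pre-LLM trend) value of that same fraction.
    Assumes an LLM-processed abstract contains a marker word with probability
    at most 1, so observed <= (1 - share) * expected + share.
    """
    if not 0.0 <= expected < 1.0:
        raise ValueError("expected frequency must be in [0, 1)")
    # A negative gap gives no evidence of LLM use, so clamp at zero.
    return max(0.0, (observed - expected) / (1.0 - expected))


if __name__ == "__main__":
    # Invented numbers: 50% expected vs. 55% observed.
    bound = llm_share_lower_bound(observed=0.55, expected=0.50)
    print(f"at least {bound:.0%} of abstracts")
```

If some LLM-assisted abstracts contain no marker words at all, the real share exceeds the bound, which mirrors the researchers' caveat that the true figure could be higher.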

These measured percentages can also vary considerably across different subsets of articles. The researchers found that articles written in countries such as China, South Korea, and Taiwan had LLM marker words in 15% of cases, suggesting that “LLMs may…help non-native speakers edit English texts, which could justify their extensive use.” On the other hand, the researchers suggest that native English speakers “may (simply) be better at noticing and actively removing unnatural style words from LLM results,” thereby hiding their LLM usage from this type of analysis.

Detecting LLM usage is important, the researchers note, because “LLMs are notorious for fabricating references, providing inaccurate summaries, and making false claims that appear authoritative and convincing.” But as knowledge of the telltale LLM keywords begins to spread, human editors may become more effective at removing these words from generated text before it is shared with the world.

Who knows, maybe future large language models will run this kind of frequency analysis themselves, down-weighting marker words to better disguise their output as human writing. Before long, we may need to call in some Blade Runners to spot the generative AI text hiding in our midst.